The Mathematics of "Which Character Are You?"

Using AI embeddings to create personality quizzes that actually make sense

Welcome back to Better Know a Dataset!

In today's post, we're going to do something a little different — instead of analyzing an existing dataset, we'll generate our own using large language models (LLMs) to tackle a ubiquitous internet phenomenon: personality quizzes.

Comparisons Galore

If you're like me, you've probably taken more than your fair share of "Which Celebrity Are You?" quizzes. You know the ones — answer 10 random questions about your breakfast preferences and sleep habits, only to discover you're apparently Taylor Swift (though you always saw yourself as more of a Beyoncé).

In fact, we live in a world full of comparisons. We want to know if we're more of a Rachel or a Monica, a winter or a spring, and a dog or a cat. Spotify needs to figure out if that indie song you like is more similar to Arctic Monkeys or The Strokes. Netflix has to decide if you, having just finished "The Crown," would prefer another show about British royalty or perhaps a pivot to a different kind of drama about rich people being dramatic.

But here's the thing about all these comparisons — it’s surprisingly hard to do them systematically. Sure, you could argue that an apple is more similar to an orange than to a banana because they're both round. But you could also argue that the banana is more similar to the apple because they're both sweet. Or you can also bring up the fact that bananas and oranges are both tropical fruits. Which is correct? We don't have a conclusive way to answer this. It's not like measuring height or weight where we can just pull out a ruler or a scale.

A simple solution to this problem is to convert things into numbers. Let's stick with our fruit example (I promise this is going somewhere interesting). We could choose a set of relevant categories — color, sweetness, tartness, size — and assign a score to each category. An apple might score 7/10 on sweetness, 6/10 on tartness, and be predominantly red in color. A banana might score 8/10 on sweetness, 1/10 on tartness, and be yellow. Now we have a numerical way to compare them.

This approach of converting things into numbers is already common in many areas. Dating apps turn people into numerical scores (outdoorsy: 8/10, introverted: 3/10, bedtime: 11PM) to find matches. Recruiters score job candidates on experience, skills, and leadership. Wine critics rate wines on body, acidity, and tannins. In each case, we're converting complex things into comparable numbers.1

The Incredible Versatility of Embeddings

While this manual scoring system works, it has some significant limitations. First, choosing which characteristics to measure is itself a challenge - how do we know we're picking the right ones? Second, having humans assign all these scores is time-consuming and potentially biased. What if we could automate this process of converting things into meaningful numbers?

This is where embeddings come in. At their core, embeddings are a way to represent words, sentences, or any piece of information as lists of numbers that capture their meaning. Just as we can describe fruits using numbers for sweetness and size, embeddings describe things using hundreds of numerical values — but with a key difference. Rather than having humans manually select the characteristics to measure (like sweetness or outdoors-iness), we use large language models to automatically learn what characteristics matter.

These models analyze how words appear in context — essentially, looking at which words tend to appear around each other in large amounts of text. Each word gets converted into hundreds of numbers that capture its semantic properties. So you can think of a word's embedding as representing the probabilities of what words might appear around it. For example, the numerical representation of "apple" is influenced by how often it appears near words like "fruit," "sweet," "pie," or "orchard." When words are used in similar contexts, they end up with similar numerical patterns in their embeddings.

Once we have these numerical representations, we can perform mathematical operations like measuring similarity between words, adding and subtracting meaning vectors, or finding clusters of related concepts. The classic example is that embeddings can capture analogies through vector arithmetic. If you take the embedding for "king," subtract the embedding for "man," and add the embedding for "woman," you get something very close, numerically, to the embedding for "queen."

Similarly, "Paris" minus "France" plus "Japan" gives you something close to "Tokyo.

The cool thing is that these relationships were not explicitly programmed, but rather they emerge from the patterns in language that the embedding model learns.

In addition to doing cool arithmetic, we can also use these numerical representations to measure similarity between any two things by comparing their embeddings. The standard way to do this is using cosine similarity, which essentially measures the angle between two embedding vectors. The smaller the angle, the more similar the concepts. Think of it like two friends trying to point at something. If they're pointing in exactly the same direction, that's maximum similarity (cosine of 1). If one's pointing at the sky while the other's pointing at the ground, that's minimum similarity (cosine of -1). And if one's pointing east while the other points north, they're somewhat related but not quite aligned (cosine of 0).2

This property makes embeddings particularly useful for applications like those "What ___ Are You?" personality quizzes. When you answer questions about yourself, those answers can be converted into an embedding. Then we can compare your embedding with embeddings of different categories (animals, historical figures, etc.) to find the closest matches. Your responses might create a pattern that's numerically similar to both a wolf (independent, territorial) and Napoleon (ambitious, strategic).

Modern embedding techniques can handle not just single words but entire pieces of text. When we feed a description into an embedding model, it analyzes the patterns and converts them into numbers that capture subtle meanings and relationships. This means we can take detailed descriptions of anything — animals, historical figures, paintings, job descriptions, research papers — convert them all into the same kind of numerical space, and find meaningful similarities.

Building Our Dataset

The first challenge is deciding what to include in our comparisons. I want entities that would be widely known but diverse enough to capture different kinds of personalities and characteristics. My solution is to ask ChatGPT to generate three lists of 30 items each: (1) animals everyone knows about, (2) universally recognized historical figures, and (3) famous paintings.

Here's what it came up with:

But having the lists is not enough — we need rich, consistent descriptions of each item that would capture their essential characteristics. And this is precisely what language models are good at, with the help of some careful prompt engineering. So for each category, I created prompts that would generate comparable descriptions while avoiding superficial similarities:

Generate a 500-word summary of {animal name} focusing on (i) Physical appearance, (ii) Behavioral Characteristics, and (iii) Social Structures and Relationships. In your explanation, refer to the animal as "this animal" rather than {animal name}.

Generate a 500-word summary of {figure name} focusing on (i) Major Contributions or Achievements, (ii) Historical Significance, and (iii) Legacy and Impact.. In your explanation, refer to the animal as "this figure" rather than {figure name}.

Generate a 500-word summary of {painting name} focusing on (i) Artistic Style and Technique, (ii) Historical and Cultural Significance, and (iii) Key Themes and Interpretations. In your explanation, refer to the animal as "this painting" rather than {painting name}.

There are a few things that crossed my mind when designing these prompts. First I had to make sure that the prompt focuses on persistent characteristics rather than specific events or surface details. I also made sure to maintain parallel structure across categories (e.g., "behavior" in animals maps to "achievements" in historical figures) with similar length and details. It was also important to avoid using the item's name in the description, preventing the embedding from being skewed by the name itself.

Using these prompts with the OpenAI API, I generated detailed descriptions for all 90 items. Each description was then converted into an embedding, once again using the OpenAI API. At this point, we now have a dataset where every animal, historical figure, and painting is represented by the same kind of mathematical object, making them directly comparable.

ChatGPT-Generated Description of “Chicken”

Just to illustrate how this works, let me show one example. I asked ChatGPT to describe a chicken, which generated this wonderfully thorough description:

This animal, a domesticated bird commonly raised for its meat and eggs, has distinct physical characteristics that make it easily recognizable. Typically, this animal features a rounded body covered in feathers that can vary significantly in color and texture, ranging from white and brown to black and even speckled. The size can vary depending on the breed, with some individuals being relatively small and others quite large. This animal has a short, stout neck that supports a small head, characterized by a prominent beak adapted for pecking at food and foraging. The head is adorned with unique features such as a comb—a fleshy crest on the top—which varies in shape and size among breeds, as well as wattles that hang beneath the beak. This animal possesses strong legs that support its body, complete with three forward-facing toes and one backward-facing toe, which assist in both scratching the ground for food and providing balance while walking or running.

In terms of behavioral characteristics, this animal is known for its varied and complex behaviors. Generally, this animal exhibits curiosity and intelligence, often engaging in foraging behaviors where they scratch at the ground and peck at seeds, insects, and other food sources. Socially, this animal communicates using a wide range of vocalizations, including clucks, squawks, and crows, each serving different purposes, such as alerting the flock to danger or signaling the presence of food. This animal can exhibit strong maternal instincts, especially among hens, who will often fiercely protect their chicks. Playful behaviors are also evident, with young chicks engaging in various social games that aid in their development. Additionally, this animal is known to perform dust-bathing, which helps maintain feather health and provides comfort.

Social structures and relationships within this animal's groups are intricate and dynamic. Typically found in flocks, this animal establishes a pecking order, which dictates social hierarchy and access to resources like food and nesting sites. The dominant individuals, often the larger and more robust members, will assert their status through behavior such as standing tall and displaying their feathers. However, this animal also shows cooperative behaviors, such as roosting together for safety and foraging in groups. The bonds within these flocks are notable; individuals recognize each other and can form social bonds that improve cohesion and community dynamics. Maternal bonds are particularly strong, with hens forming nurturing relationships with their chicks, allowing them to learn and adapt within the social structure. Through these various interactions, this animal demonstrates a remarkable capacity for social organization, providing them with protection and enhancing their survival in both wild and domestic settings. Overall, this animal exhibits multifaceted characteristics that contribute significantly to their adaptability and success as a domesticated species.

You can see that ChatGPT does an exceptional job of capturing the essence of chicken-ness. And the thoroughness of the information gives the embedding model rich semantic material to work with. When we convert this description into numbers, we're not just capturing "bird with feathers" – we're capturing complex concepts like social hierarchy, maternal care, and group dynamics. This means our embeddings can find meaningful similarities not just with other animals, but with human social structures, organizational systems, or even historical figures. (Maybe there's a reason we use "pecking order" to describe office politics!)

Now when we convert this description to an embedding, we get the following vector of length 1536, which is the default length for OpenAI’s text-embedding-3-small:

What does 0.072 mean? Why -0.001? It's tempting to try to interpret each number as representing some specific concept – maybe one dimension represents "size" and another "friendliness." But that's not quite how it works. These numbers exist in what mathematicians call a vector space, and while each dimension does represent something, it's not something we can easily label or understand.

But here's the beautiful thing: we don't need to understand what each number means. What matters is that these numbers create a space where similar things end up close together and different things end up far apart. Think of it like a huge cosmic dance floor where each thing – whether it's a chicken, Napoleon, or the Mona Lisa – finds its place based on its characteristics. We can't really understand why each dancer is standing exactly where they are, but we can see who's dancing near whom.3

Analyzing Similarity

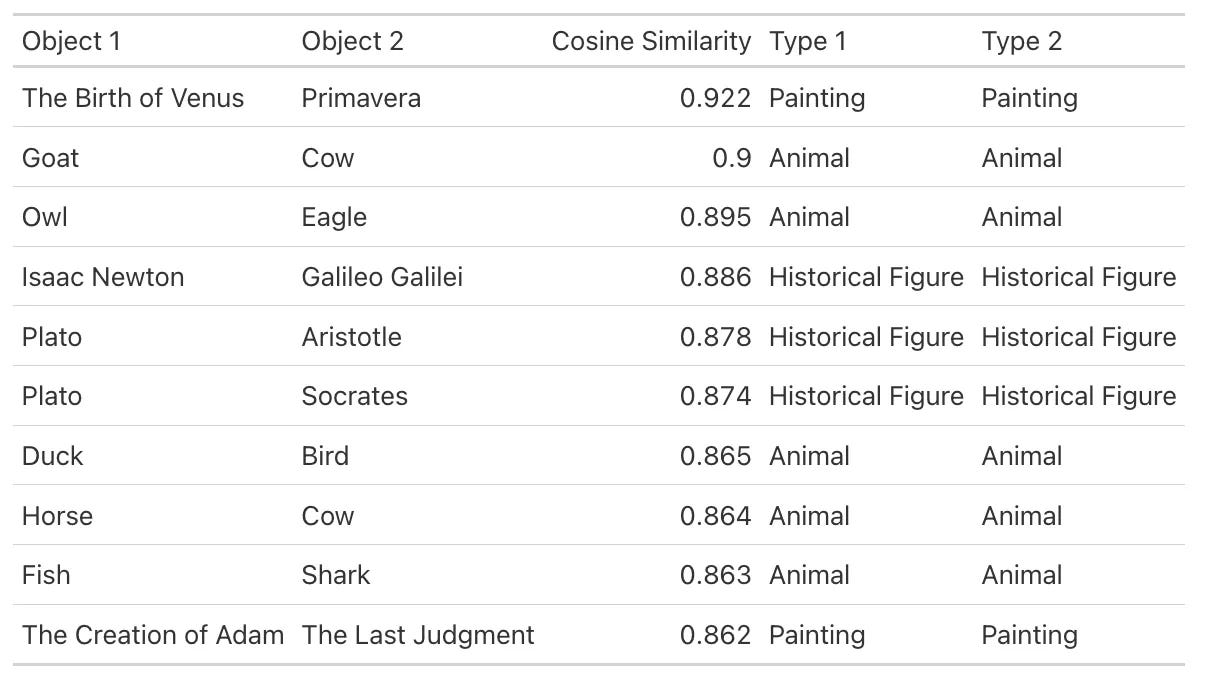

At this point, we have the numerical representation of each item in each category. Let’s first take a look at the pairs with the highest cosine similarity, irrespective of the category:

Not surprisingly, the highest similarities occur between items of the same category. In art, we see Renaissance masterpieces sharing themes: "The Birth of Venus" and "Primavera" have the highest similarity (0.922). For animals, there’s a natural grouping of farm animals (goat and cow) and birds of prey (owl and eagle). For historical figures, scientists tend to cluster together (Newton and Galileo) and ancient Greek philosophers also form a tight group (Plato, Aristotle, and Socrates).

This makes intuitive sense — items that share more contextual and conceptual similarities end up closer together in their numerical representations.

Now let’s look at the pairs with the lowest cosine similarity, this time focusing on comparisons within the same category:

Two things stand out here. First, even the "most different" pairs still have positive similarities, with none dropping below 0.29. This is probably because sharing the category of "historical figure" creates some baseline similarity in how they're described - after all, they're all humans who made notable impacts on history.

Second, these lowest similarities are all between historical figures, while animals (like sharks vs rabbits) or paintings (like The Scream vs The Birth of Venus) never appear among the most dissimilar pairs. This suggests that historical figures have more potential for divergence in their descriptions — perhaps because human achievement can vary more widely than biological characteristics or artistic elements. While all animals share basic needs and behaviors, and all paintings share fundamental artistic properties, human accomplishments can differ dramatically in their nature and context.

The real fun begins when we look across categories. Here are the most similar pairs from different categories:

This table shows some cool cross-category connections, particularly centered around Leonardo da Vinci. The highest similarity (0.732) is between da Vinci and the Mona Lisa, which makes intuitive sense given he painted it. Following this are strong connections between da Vinci and other Renaissance artworks like The School of Athens (0.699) and The Creation of Adam (0.648), suggesting that the language used to describe both the artist and his contemporary artworks shares significant overlap.

Da Vinci also maintains moderate similarities (around 0.61-0.63) with various Renaissance masterpieces like The Birth of Venus, The Last Supper, and The Last Judgment. This consistent range of similarities suggests that the language model captures both da Vinci's role as an artist and his place in the broader Renaissance art movement, even when comparing him to works he didn't create. There's also a broader Renaissance theme, with works like Primavera (0.572) and The Arnolfini Portrait (0.564), perhaps capturing the shared artistic period.

Since there are no animals in that list, let’s see how the model handles connections between animals and other categories. Here are the top 5 pairs that has an animal in it:

The similarities here are notably lower (around 0.3). This suggests that while the model can capture some relationship between animals and cultural artifacts, these connections are much weaker than those within cultural categories like art and historical figures.4

Name vs. Nature: When Words Meet Their Descriptions

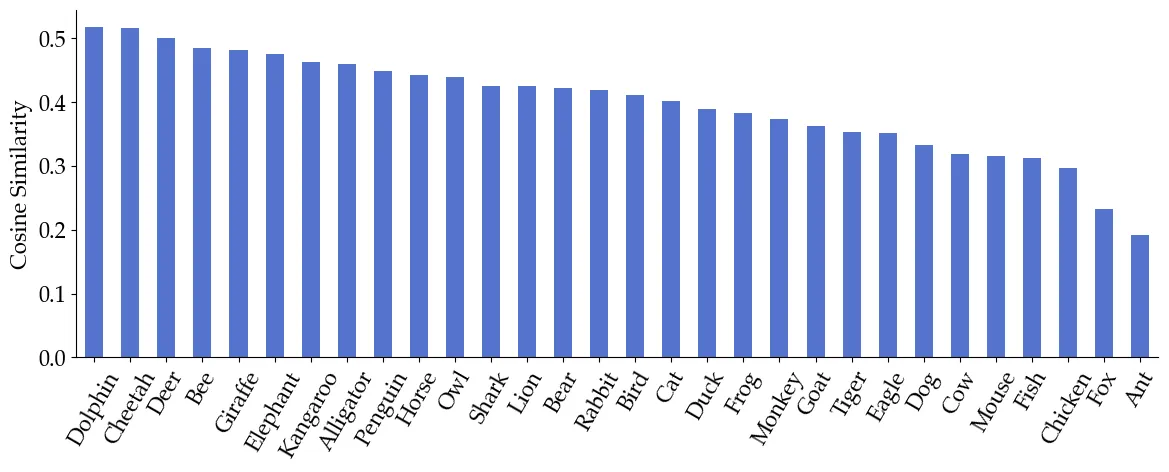

Now here's where things get meta. We can also compare the embedding of just the word "lion" with the embedding of our detailed lion description. The greater the similarity, the closer our structured descriptions match the common understanding of these animals. The figure below shows the similarity between these two embeddings for each animal:

One striking observation is how low these similarities are overall - even the highest scores barely reach 0.5. Another pattern seems to be that generic animal names (dog, fish) show lower similarities than specific ones (Dophin, Cheetah) — presumably, this is because the word "bird" has to capture everything from hummingbirds to ostriches, while "penguin" refers to a more specific set of characteristics.

We can do a variant of this exercise. I fed in the description to ChatGPT and asked it to guess the object based on the description. Across the 90 objects, ChatGPT successfully guessed all but one — based on the description of Primavera, it incorrectly guessed it as the Birth of Venus. This confusion is quite understandable given that both paintings were created by Botticelli, share similar mythological themes, and feature similar artistic techniques.

In fact, if we look at our similarity tables from earlier, these two paintings had one of the highest similarity scores, suggesting that their descriptions capture many overlapping characteristics. This raises an interesting question about how we describe art — perhaps our language struggles to capture the subtle visual differences that make each masterpiece unique. While an art historian might immediately spot the distinctive elements of each painting, the verbal descriptions we use might not fully convey these nuances.

Finding Your Spirit Object

With this apparatus in place, we can now build on the Buzzfeed survey. The idea is to write something about yourself, obtain its embedding, and then find the object — animal, historical figure, or painting — that is closest in the embedding space.

Let’s first try it on the historical figures in our data. The table below shows the cross-category comparisons between 10 historical figures and their most and least similar animals and paintings.

For animals, the elephant appears as the closest animal for many historical figures - particularly leaders and intellectuals like Washington, Hammurabi, Edison, Genghis Khan, Aristotle, and Einstein. This consistent association might reflect shared qualities like intelligence, power, or memorability. In contrast, the farthest animals show more variation but tend toward smaller or less imposing creatures like penguins, rabbits, and chickens.

The painting associations are equally interesting. "Liberty Leading the People" appears as the closest painting for several political figures (Washington, Lenin, Mandela, Franklin), suggesting the embedding model captures thematic connections between revolutionary or leadership figures and artistic depictions of political liberation. Meanwhile, "The School of Athens" shows up as the closest painting for intellectual figures like Einstein and Aristotle, which makes sense given the painting's celebration of philosophy and knowledge.

Now, let's try this approach on a more personal level! I first entered the following description about myself (which is generated by me, not ChatGPT):

I’m a finance professor at Columbia Business School, originally from Korea. I studied finance and electrical engineering in undergrad. I’m a big Arsenal fan and enjoy reading books with a mythical or surreal twist, like those by Borges or Auster. I also like watching animal documentaries and think Pokémon doesn’t get enough credit for the strategic element in its battles. I listen to all kinds of music, except for EDM. I don’t like loud places and find that I handle cold weather much better than heat, which suits my preference for quieter, more relaxed environments.

I then convert this description into an embedding and find the top 3 matches for each category:

According to this, my spirit animal is a horse; my spirit historical figure is a mix of Albert Einstein and Ben Franklin; and my spirit painting is a mix of The Persistence of Memory, The Blue Boy, and The Nighthawks. I’d say this is pretty good because Ben Franklin and Nighthawks are definitely on my list of favorites.

The horse match initially puzzled me, but thinking about it, horses are known to be more cognitively advanced than we usually think and also social animals that need their space. Perhaps the embedding picked up on these subtle personality traits rather than more obvious characteristics.

Of course, like any good Buzzfeed quiz, we shouldn't take these results too seriously. But they do highlight how embeddings can surface connections that aren't immediately obvious – sometimes even surprising the person being analyzed!5

Conclusion

Takeaways

Embeddings provide a powerful framework for comparing seemingly incomparable things. By converting descriptions into numerical vectors, we can discover meaningful connections that might not be apparent or challenging to quantify.

Language models can be effective tools for generating structured, consistent datasets. With careful prompt engineering, we can create detailed, standardized descriptions that maintain parallel structure across very different categories. This opens up new possibilities for creating research datasets without relying on manual annotation.

Cross-category comparisons reveal surprising patterns and connections. While some matches were intuitive (like da Vinci and the Mona Lisa), others uncovered unexpected relationships that make sense upon reflection.

Further Research Questions

One limitation of the current approach is that it only relies on textual data. We can also incorporate image embeddings — imagine matching your personality not just to a painting's description, but to its visual elements. We could analyze whether visual or textual features better capture certain personality traits. This can be done using multimodal embeddings, which can include a combination of image, text, and video as inputs.

One may also use this framework to study representation biases. By analyzing which historical figures consistently match with certain traits (like "leadership" or "intelligence"), we might uncover systematic patterns in how different genders, cultures, and time periods are described and perceived. This could provide a news lens to studying historical narratives and their modern interpretations.

I was told that this was also how the ranking worked when I was hired for my current job.

And just like how you might trust two independent friends more if they point to the same thing, when multiple embedding dimensions (our mathematical "fingers") point in similar directions, we get higher similarity scores. This is why embeddings can capture subtle connections — they're like having hundreds of different perspectives all voting on how similar two concepts are.

Although I'm making it sound like these numbers are completely mysterious, there's actually fascinating research happening in AI interpretability that tries to understand what these vectors mean.

One interesting finding is that horses show up as the top animal match for these paintings and historical figures. According to Claude, this could be because horses are historically important (military tools, transportation, many historical figures riding horses), their ubiquitous art presence (appearing in everything from cave paintings to Renaissance masterpieces), and the symbolic overlap (terms like “noble,” “graceful",” and “majestic” are often commonly used to describe both horses and historical figures).

In fact, this kind of "personality prediction" has some serious implications beyond just fun quizzes. There’s a recent academic work showing how big data and AI can flip traditional information asymmetries - like how insurance companies can now know more about you than you know about yourself. The difference is that while Buzzfeed tells you you're a Chandler when you think you're a Ross, insurance companies might predict your health risks better than your own doctor.